Statistical inference and hypothesis testing as the basis for accurate simulation

Simulation modeling is only as powerful as the assumptions it is built on – that is, without a sound representation of the target system, simulation doesn’t have much utility. To be able to uncover the full potential of simulation, businesses need to have a quantitative understanding of the processes or systems they are looking to model.

Although it may seem simple, quantifying the properties of an environment can be challenging because businesses often don’t have comprehensive knowledge of the statistical properties of their systems. This lack of information is called uncertainty.

When uncertainty in data rears its ugly head, one of the things we can do is use statistics and probability. And when it comes to inferring the quantitative properties of a target process, statistical inference and hypothesis testing can go a very long way.

The importance of statistical inference in simulation

Statistical inference is a family of methods aimed at helping researchers identify the statistical properties of a process or system. More specifically, statistical inference is concerned with deducing the properties of the probability distribution(s) underlying a process of interest.

The need for statistical inference in simulation is dictated by the fact that researchers very often do not have all-inclusive knowledge about the statistical properties of an observed process. Statistical inference tackles this problem by allowing researchers to estimate unknown properties based on limited sets of data.

Within the context of software delivery simulation, statistical inference could be used to estimate:

- How much time, on average, it would take software developers to produce artifacts.

- The maximum and minimum amounts of time necessary to produce artifacts.

- The failure rate of code quality checks.

- The correlation between different variables, such as between artifact production duration and quality check failure.

These are only some of the areas where statistical inference may be used – in practice, statistical inference methods may be adapted to any process or part thereof whose properties need to be estimated.

Needless to say, if we had access to all statistics relevant to our task – or, in the language of statistics, possessed data from the entire population – we could compute the properties of interest exactly instead of estimating them. In real life, however, researchers can rarely obtain data relating to an entire population. Usually, this is because of:

- Budget constraints.

- Time constraints.

- Inaccessibility of data.

To handle these limitations, statistical inference is typically carried out only on a subset of relevant data from the target system. Although working with subsets of data may not allow researchers to compute the actual values of the properties of interest, it is often sufficient for obtaining adequately accurate estimates.
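The sketch below illustrates this under purely hypothetical assumptions: a simulated “population” of 10,000 artifact durations drawn from a normal distribution, of which only a random subset of 100 is observed. The sample mean should land close to the population mean, and a normal-approximation 95% confidence interval expresses how uncertain that estimate is.

```python
import random
import statistics
from statistics import NormalDist

rng = random.Random(42)  # fixed seed so the sketch is reproducible

# Hypothetical population: durations (hours) of every artifact ever produced.
population = [rng.gauss(6.0, 1.5) for _ in range(10_000)]
population_mean = statistics.fmean(population)

# In practice only a subset is observed, e.g. 100 randomly chosen artifacts.
sample = rng.sample(population, 100)
sample_mean = statistics.fmean(sample)
std_error = statistics.stdev(sample) / len(sample) ** 0.5

# Approximate 95% confidence interval based on the normal distribution.
z = NormalDist().inv_cdf(0.975)
ci = (sample_mean - z * std_error, sample_mean + z * std_error)

print(f"population mean: {population_mean:.3f}")
print(f"sample mean:     {sample_mean:.3f}, 95% CI: ({ci[0]:.3f}, {ci[1]:.3f})")
```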

Statistical hypothesis testing and its eight steps

A method of statistical inference, hypothesis testing helps researchers decide, based on sample data, whether there is enough evidence to reject a hypothesis about a property of an observed system.

Although the focus of this post is on simulation, the main steps and methods of statistical hypothesis testing are essentially the same in every industry, so the rules described here can be adapted to many other fields.

In general, hypothesis testing follows these eight steps:

1. Stating the problem. The first step is identifying the property to be tested and the problem to be solved. The problem formulation can come down to a question, such as “do longer working weeks increase the failure rate of code quality checks?”

2. Stating the null hypothesis. The null hypothesis, designated H0, is a default hypothesis stating that there is no effect or no difference, for instance that the observed quantity (such as a correlation) is zero. For example, if we were studying the correlation between code quality and longer working weeks, the null hypothesis would state that increases in weekly workload don’t affect the quality of produced artifacts. Typically, a hypothesis test is designed to look for evidence strong enough to reject the null hypothesis.

3. Stating the alternative hypothesis. The alternative hypothesis, designated HA, is the hypothesis we are looking for evidence in favor of. Continuing our previous example, the alternative hypothesis could state that there is a negative correlation between longer working weeks and code quality.

4. Setting α. α, called the significance level, is the probability of the study rejecting the null hypothesis when the null hypothesis is true. Lower α values mean that more convincing results are necessary to reject H0. A typical value for α is 0.05, or 5%.

5. Collecting data. This step involves the collection of relevant data for the study. Data may be collected either through observation or experimentation, whichever method is more suitable or available.

6. Calculating a test statistic and the p-value. At this step, a test statistic (such as the z-statistic, the t-statistic, the chi-squared statistic, or Pearson’s correlation coefficient) and its associated p-value are calculated. The p-value is the probability, assuming the null hypothesis is true, of obtaining a test statistic at least as extreme as the one observed, and it is the value that is compared with α to make conclusions about the null hypothesis. The p-value can be looked up in the statistical table for the distribution of the chosen test statistic or calculated with statistical software.

7. Constructing acceptance/rejection regions. To decide whether to reject the null hypothesis, it is necessary to construct acceptance and rejection regions for the chosen test statistic. These regions are bounded by the critical values of the test statistic’s distribution under the null hypothesis, chosen so that the rejection region has probability α when H0 is true.

8. Drawing conclusions about the null hypothesis. If the obtained p-value is equal to or smaller than α (equivalently, if the test statistic falls in the rejection region), the null hypothesis is rejected; otherwise, we fail to reject it. Essentially, a low p-value signifies that the observed result would be very unlikely if the null hypothesis were true. When rejection occurs, the null hypothesis is said to be rejected at significance level α, or with 1 – α confidence.

Errors in statistical hypothesis testing

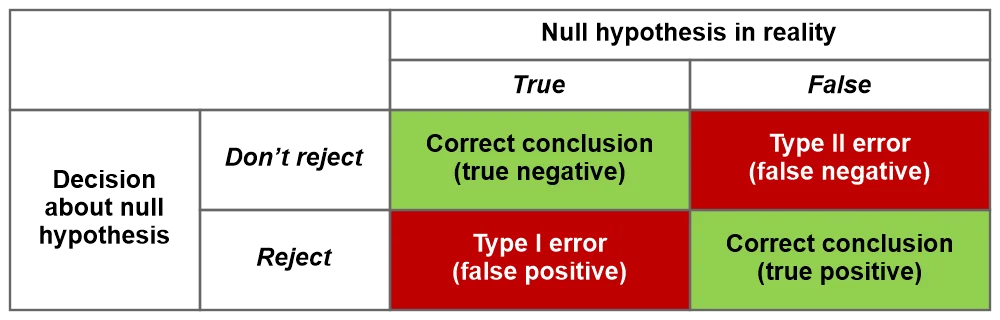

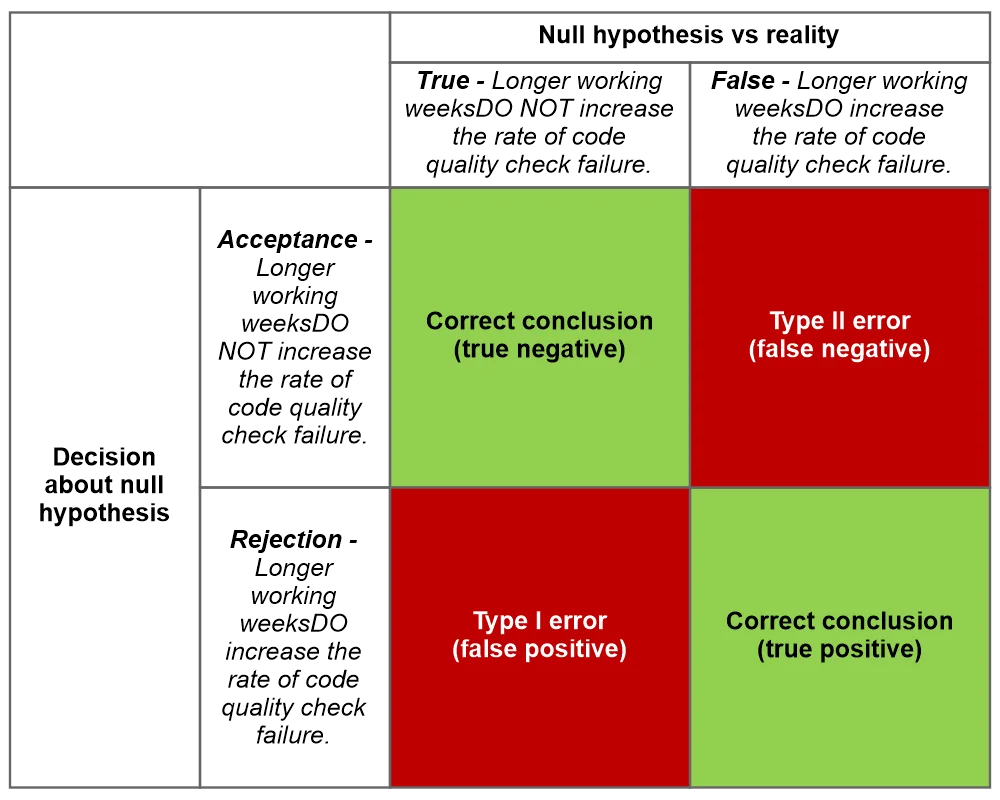

At a high level, depending on the correctness of the results of hypothesis testing, the following four outcomes are possible:

Hypothesis testing outcomes


In statistics:

- Type I error (or false positive) is the rejection of a null hypothesis that is actually true.

- Type II error (or false negative) is the failure to reject a null hypothesis that is actually false.
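Since α is by definition the probability of rejecting a true null hypothesis, it is also the type I error rate a test is designed to have. A quick Monte Carlo check makes this concrete. Assuming, purely for illustration, normally distributed data with known σ = 1 and a two-sided one-sample z-test, the sketch runs the test on many simulated samples for which H0 (mean = 0) is true; the share of rejections should land close to α.

```python
import random
from statistics import NormalDist

ALPHA = 0.05
N = 30            # observations per simulated study
TRIALS = 10_000   # number of simulated studies
std_normal = NormalDist()
rng = random.Random(1)

false_positives = 0
for _ in range(TRIALS):
    # H0 is true by construction: the data really has mean 0 (sigma = 1).
    sample = [rng.gauss(0.0, 1.0) for _ in range(N)]
    z = sum(sample) / N * N ** 0.5         # z-statistic with known sigma = 1
    p = 2 * (1 - std_normal.cdf(abs(z)))   # two-sided p-value
    if p <= ALPHA:
        false_positives += 1               # rejecting a true H0: type I error

type_i_rate = false_positives / TRIALS
print(f"observed type I error rate: {type_i_rate:.3f} (alpha = {ALPHA})")
```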

Both types of error are highly undesirable, but depending on the context, one may be more disastrous than the other.

To demonstrate this, let’s once again consider our null hypothesis example:

Longer working weeks DO NOT increase the rate of code quality check failure.

The possible conclusions for this null hypothesis are as follows:

Hypothesis conclusions


If the null hypothesis is not rejected when it’s actually false (type II error or false negative) and software developers’ workload is increased in the hopes of completing the project faster, code quality may decrease due to employee fatigue.

In contrast, if the null hypothesis is actually true but is rejected (type I error or false positive), we may not see consequences as drastic. More precisely, we can point out two possible outcomes:

- Believing that increased workload causes fatigue and hence worse code quality, we would not require software developers to work more, which could at least allow us to retain current productivity levels.

- On the other hand, we would be missing out on potential increases in productivity, because we would wrongly believe that a longer working week hurts code quality when, in this scenario, it would not.

In this example, then, a type II error may be the more costly of the two, as it is very likely to negatively affect both code quality and productivity.

Nonetheless, erroneous conclusions should be avoided because they can:

- In the best case, prevent us from achieving better productivity.

- In the worst case, worsen productivity.

To minimize the risk of error, research teams should keep the following two points in mind when constructing a statistical hypothesis test:

- When the null hypothesis is false, the test should reject it with high probability. This probability is called the test’s statistical power, and raising it lowers the type II error rate.

- When the null hypothesis is true, the test should retain it with high probability. That probability is 1 – α, so choosing a suitably low α keeps the type I error rate low.

The data itself also has a major impact on the validity of a hypothesis test: small or unrepresentative samples can lead to misleading conclusions, so research teams should pay particular attention to how much data they collect and how it is sampled.
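The link between power, type II error, and the amount of data collected can be illustrated with a small simulation. Assuming, purely for illustration, a two-sided one-sample z-test with known σ = 1, a hypothetical true effect of 0.5 (so H0, mean = 0, is false), and α = 0.05, the sketch estimates how often the test rejects the false H0 at two sample sizes and compares that with the closed-form power of the z-test. The type II error rate is simply 1 minus the power.

```python
import random
from statistics import NormalDist

ALPHA = 0.05
EFFECT = 0.5      # hypothetical true mean shift, so H0 (mean = 0) is false
TRIALS = 5_000
std_normal = NormalDist()
z_crit = std_normal.inv_cdf(1 - ALPHA / 2)  # two-sided critical value
rng = random.Random(7)


def simulated_power(n):
    """Share of simulated studies of size n that reject the false H0."""
    rejections = 0
    for _ in range(TRIALS):
        sample = [rng.gauss(EFFECT, 1.0) for _ in range(n)]
        z = sum(sample) / n * n ** 0.5   # z-statistic, known sigma = 1
        if abs(z) >= z_crit:             # statistic in the rejection region
            rejections += 1
    return rejections / TRIALS


def analytic_power(n):
    """Closed-form power of the two-sided z-test, for comparison."""
    shift = EFFECT * n ** 0.5
    return (1 - std_normal.cdf(z_crit - shift)) + std_normal.cdf(-z_crit - shift)


power_by_n = {n: simulated_power(n) for n in (10, 50)}
for n, power in power_by_n.items():
    print(f"n = {n:3d}: power = {power:.3f} (analytic {analytic_power(n):.3f}), "
          f"type II error rate = {1 - power:.3f}")
```

Under these assumptions the larger sample rejects the false H0 far more often, which is one concrete sense in which collecting more data lowers the type II error rate without changing α.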

Next steps

This post didn’t delve into the very depths of statistical hypothesis testing – rather, it covered the basics to help businesses gain a better understanding of what makes a successful simulation. Although a high-level understanding of statistical inference and hypothesis testing is important, businesses don’t need to hire entire research teams to carry them out.

In modern business, hypothesis testing rarely needs to be done by hand. Teams can leverage a vast range of statistical software packages to assess their hypotheses without doing the math themselves, and programming languages like Python and R have libraries that facilitate hypothesis testing as well.