Closing the loop - simulation validation in software delivery

"…simulations are opaque in that they include too many computations and thus cannot be checked by hand, this doesn’t exhaust what we might want to call the opacity of simulations… the opacity of a method is its disposition to resist knowledge and understanding."

"…philosophers have tried to show that opacity doesn’t compromise the ability of computer simulations to achieve their tasks…[some] have argued that, despite being opaque, computer simulations can produce knowledge…[and]…understanding."

—Claus Beisbart (2021).

The full simulation lifecycle can be both time-consuming and resource-intensive. It can be tempting to take action as soon as seemingly reasonable simulation results arise. However, before the simulation is used to direct your decision-making, a validation phase should be performed to “close the loop.”

Validation is an integral part of any simulation project, and skipping or neglecting it can lead to severe consequences. Simulation exercises involve uncertainty, complexity, and incomplete information, and validation helps reduce uncertainty, manage complexity, and expose gaps in the information the model relies on. This post introduces the purpose and importance of simulation validation and outlines several ways to carry it out.

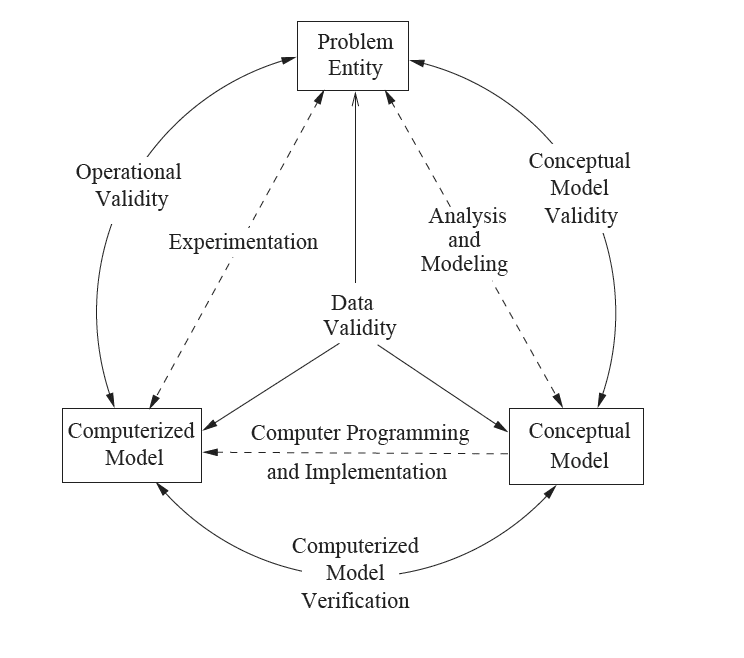

We start by defining validation as the “substantiation that a computerized model within its domain of applicability possesses a satisfactory range of accuracy consistent with the intended application of the model” (Schlesinger, 1979, p. 103). We’ll contrast this with Sargent’s (1999, December, p. 39) definition of verification as “ensuring that the computer program of the computerized model and its implementation are correct.”

What is simulation validation in software delivery?

Simulation validation in software delivery refers to assessing the simulation’s performance against the real-world software delivery system. Validation ensures that the simulation can model the system with sufficient accuracy. Simulation validation is complementary to simulation verification. In contrast to validation, the goal of verification is to ensure that the simulation meets the specifications of the conceptual model; in other words, that the simulation has been implemented correctly.
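To make the distinction concrete, here is a minimal Python sketch that is not taken from any particular simulator. It assumes a hypothetical conceptual model in which cycle times follow an exponential distribution with a 3-day mean; the observed cycle times and both tolerances are invented for illustration. `verify` checks the implementation against the conceptual model's specification, while `validate` checks the simulation against observations of the real system.

```python
import random
import statistics
from typing import List

def simulate_cycle_times(n: int, mean_days: float, seed: int = 0) -> List[float]:
    """Hypothetical simulation: draw n cycle times (days) from an exponential
    distribution whose mean is fixed by the conceptual model."""
    rng = random.Random(seed)  # seeded so runs are reproducible
    return [rng.expovariate(1.0 / mean_days) for _ in range(n)]

def relative_error(value: float, reference: float) -> float:
    return abs(value - reference) / abs(reference)

def verify(simulated: List[float], spec_mean: float, tolerance: float = 0.05) -> bool:
    """Verification: does the implementation reproduce what the conceptual
    model specifies (here, the mean cycle time)?"""
    return relative_error(statistics.mean(simulated), spec_mean) <= tolerance

def validate(simulated: List[float], observed: List[float], tolerance: float = 0.15) -> bool:
    """Validation: does the simulation agree with what the real delivery
    system actually did?"""
    return relative_error(statistics.mean(simulated), statistics.mean(observed)) <= tolerance

# The conceptual model assumes a 3-day mean cycle time; the observed values
# below are invented for illustration.
simulated = simulate_cycle_times(10_000, mean_days=3.0)
observed = [5.1, 4.2, 6.0, 3.9, 5.5, 4.8]

print("verified:", verify(simulated, spec_mean=3.0))  # implementation vs. spec
print("validated:", validate(simulated, observed))    # simulation vs. reality
```

In this example the first check passes and the second fails: the code faithfully implements a model that does not describe the real system, which is exactly the gap validation is meant to catch.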

Purpose-built simulators (such as the Software Delivery Simulator) typically handle simulation verification for the user. Because each simulation is built to satisfy a specific purpose, its validity is determined in terms of that purpose. Validation, by contrast, requires effort from the team and stakeholders across the stages of validation conceptualization, planning, and execution.

Hicks et al. (2015, p. 020905-2) suggested breaking down the verification and validation process for computer simulations into seven stages:

- Formulate a research question that a model and simulation can answer.

- Prototype your methods and create a verification and validation plan.

- Verify your software.

- Validate your results by comparing your model and simulation to independent experiments and other models.

- Test the robustness of the study by evaluating the sensitivity of your results to model parameters and other modeling choices.

- Document and share your model and simulation.

- Generate predictions and hypotheses that can be tested in the real world.
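The robustness step in this list can be illustrated with a one-at-a-time sensitivity analysis: raise each parameter by a fixed relative amount, hold the others constant, and record how much the output moves. The Python sketch below assumes a deliberately simple, hypothetical model (`weeks_to_finish`) in which a fraction of completed work returns as rework; the parameter values and the expected directions of effect are invented for illustration.

```python
from typing import Callable, Dict

def weeks_to_finish(backlog: float, throughput: float, rework_rate: float) -> float:
    """Hypothetical toy model: weeks needed to clear a backlog when a
    fraction of each week's finished items comes back as rework."""
    effective_throughput = throughput * (1.0 - rework_rate)
    return backlog / effective_throughput

def one_at_a_time_sensitivity(model: Callable[..., float],
                              base: Dict[str, float],
                              step: float = 0.10) -> Dict[str, float]:
    """Raise each parameter by `step` (relative), keep the others at their
    base values, and return the relative change in the model's output."""
    base_output = model(**base)
    effects = {}
    for name, value in base.items():
        perturbed = dict(base)
        perturbed[name] = value * (1.0 + step)
        effects[name] = (model(**perturbed) - base_output) / base_output
    return effects

base = {"backlog": 120, "throughput": 10, "rework_rate": 0.2}
effects = one_at_a_time_sensitivity(weeks_to_finish, base)
for name, change in effects.items():
    print(f"{name}: {change:+.1%}")
# backlog: +10.0%
# throughput: -9.1%
# rework_rate: +2.6%

# Direction of effect the team expects from the real system (assumed here):
# +1 means the output should rise when the parameter rises.
expected_direction = {"backlog": +1, "throughput": -1, "rework_rate": +1}
mismatches = [name for name, change in effects.items()
              if (change > 0) != (expected_direction[name] > 0)]
print("direction mismatches:", mismatches)
```

An empty `mismatches` list means the model responds to each parameter in the direction the team expects; a mismatch would be a prompt to revisit the model's assumptions.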

Why verification is insufficient for determining simulation validity

Up until the point of validation, software delivery simulation is performed in relative isolation from reality. In a simulation environment, the team can tightly control variables and come up with what appears to be a convincing representation of the target system. The simulation has been fine-tuned in a “sterile” and well-controlled environment.

The predicted outcomes can never be computed with absolute accuracy, especially without the external observation of facts in the real world. How can the team determine if the simulation will handle the modeled system’s more chaotic and unpredictable reality?

The team may have carefully evaluated the problems and the routes to overcome them, but risk always remains that the model does not comprehensively or accurately represent the real-world system. Remember, models are strictly metaphors describing reality and are thus constrained in their description of reality.

“The word ‘model’ … indicates a schematic representation of reality connecting the main quantities by laws which take the form of mathematical equations. In this formulation models are mathematical models; they pose qualitative questions, sometimes extremely difficult ones, and at the same time their nature is essentially quantitative. By producing figures and numbers we obtain a quantitative approximation of reality which is as accurate as possible. Mathematical models are never to be confused with reality itself, they represent a very simplified view of an artificially isolated part of reality. A ‘better model’ means a better approximation of part of reality but reality itself is always something fundamentally different.”

– Ferdinand Verhulst (1999, p. 31).

Without validation, miscalculations and errors, no matter how small, can creep into the final simulation results undetected. The result is damage to the simulation’s legitimacy and credibility, which could prevent the team from taking the associated actions for system transformation or process improvement. Additionally, if the simulated system has undergone significant changes since the simulation’s conceptualization, the team may need to scrap the work and go back to the drawing board.

The required level of accuracy for the model variables that serve the simulation’s purpose should be identified and specified before the model is developed. Validation done right helps the team confirm that its efforts have implemented the correct solution to the right problem. Validation also helps build credibility and trust in the simulation.

How simulation validation may be performed

There are many ways to perform simulation validation. None of them can confidently be deemed the best or correct one for all situations, so the team will need to choose the right validation strategy for its project. Sargent (1999, December, p. 41) proposed a simplified model that demonstrates the relationships between verification and different types of validation (see Figure 1).

Limited rollout

Perhaps the most reliable avenue for simulation validation is a limited rollout to a select number of teams. A limited initial rollout allows the team to analyze the actionability and accuracy of the simulation results out in the wild: for the pilot group, simulation predictions simply need to be compared with the realized outcomes of the system.
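A minimal Python sketch of that comparison, assuming each pilot team reports one predicted and one realized value for the same metric (median cycle time in days is used here). The team names, values, and the 15% tolerance are hypothetical; in practice the tolerance would be the accuracy level agreed before the model was built. Mean absolute percentage error (MAPE) is one common error measure, chosen here for simplicity rather than prescribed by any method described above.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class PilotResult:
    team: str
    predicted: float  # simulated value, e.g. median cycle time in days
    realized: float   # value observed during the limited rollout (non-zero)

def absolute_percentage_error(result: PilotResult) -> float:
    return abs(result.predicted - result.realized) / abs(result.realized)

def mean_absolute_percentage_error(results: List[PilotResult]) -> float:
    return sum(absolute_percentage_error(r) for r in results) / len(results)

def teams_outside_tolerance(results: List[PilotResult], tolerance: float) -> List[str]:
    return [r.team for r in results if absolute_percentage_error(r) > tolerance]

# Invented pilot data for three hypothetical teams.
pilots = [
    PilotResult("team-a", predicted=4.0, realized=4.5),
    PilotResult("team-b", predicted=6.0, realized=5.2),
    PilotResult("team-c", predicted=3.5, realized=3.6),
]
TOLERANCE = 0.15  # accuracy requirement agreed before building the model

print(f"MAPE: {mean_absolute_percentage_error(pilots):.1%}")          # MAPE: 9.8%
print("outside tolerance:", teams_outside_tolerance(pilots, TOLERANCE))  # ['team-b']
```

In this invented example the aggregate error looks acceptable while one team falls outside tolerance, which is a reason to inspect per-team results rather than only the average.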

Partition the input modeling data

Another effective strategy is to set aside part of the data used in simulation development for validation purposes. For instance, 80% of the data could be used for input modeling and configuration tuning, and the simulation’s output would then be compared with the remaining 20%. This approach is common in machine learning, where models are trained on only a subset of the data and the rest is held out for validation.
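The sketch below applies the 80/20 idea to a hypothetical weekly-throughput history. Two choices are assumptions of this example: the split is chronological (the most recent weeks are held out, so the model never sees the period it is validated against), and the simulation is a simple bootstrap Monte Carlo forecast that resamples past weeks. The validation check asks whether the actual held-out total falls inside the simulated 90% interval.

```python
import random
import statistics
from typing import List, Sequence, Tuple

def chronological_split(data: Sequence[int],
                        train_fraction: float = 0.8) -> Tuple[List[int], List[int]]:
    """Keep the earliest observations for building the model and hold out
    the most recent ones for validation."""
    cut = int(len(data) * train_fraction)
    return list(data[:cut]), list(data[cut:])

def bootstrap_totals(train: Sequence[int], horizon: int,
                     runs: int = 10_000, seed: int = 42) -> List[int]:
    """Monte Carlo forecast: resample past weekly throughput with replacement
    to simulate the total items finished over `horizon` weeks."""
    rng = random.Random(seed)
    return [sum(rng.choices(train, k=horizon)) for _ in range(runs)]

# Invented history: items finished per week over 20 weeks.
weekly_throughput = [7, 9, 6, 8, 10, 7, 8, 9, 5, 8, 7, 9, 8, 6, 10, 8, 7, 9, 8, 7]

train, holdout = chronological_split(weekly_throughput)  # 16 weeks / 4 weeks
totals = bootstrap_totals(train, horizon=len(holdout))
cut_points = statistics.quantiles(totals, n=20)          # 5%, 10%, ..., 95%
low, high = cut_points[0], cut_points[-1]
actual = sum(holdout)

print(f"simulated 90% interval: {low:.0f} to {high:.0f} items")
print(f"actual: {actual} items, inside interval: {low <= actual <= high}")
```

A single holdout period is a weak test on its own; repeating the check over several holdout windows gives a better sense of how often the actual outcome lands inside the simulated range.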

Other notable simulation validation methods are as follows:

- Comparison to existing models. If similar existing simulations are known to be able to validly model the target system, compare their output with the team’s simulation results.

- Parameter variability-sensitivity analysis. Parameter variability-sensitivity analysis is performed by changing the model’s internal parameters and input data to determine how the changes affect the model’s behavior and the simulation results. The relationships between the changed parameters and the associated system outputs should align across both the simulation and the modeled system.

- Face validity assessment. Face validity refers to the reasonableness of the simulation’s behavior and is determined through careful assessment by individuals knowledgeable about the real system. A model is said to have face validity when its output is consistent with expected system behavior.

- Development team judgment. The development team makes a subjective decision on model validity based on the outcomes of a range of tests and evaluations conducted as an integral part of the model development process.

- Independent verification and validation. A third party that is organizationally separate from the model development team and from the key stakeholders, sponsors, and users verifies and validates the model. This approach can be exceedingly costly and time-consuming.

- Scoring model. A scoring model can support the validation of a model. Scores, although subjective, are assigned to various categories of the validation process and then combined into an overall score; the model is considered valid when the overall score and each underlying category score exceed specified passing scores.
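As an illustration of the scoring-model approach, here is a minimal Python sketch. The category names, weights, scores, and passing thresholds are all hypothetical; the only rule taken from the text is that the model passes when the combined overall score and every underlying category score exceed their passing scores.

```python
from typing import Dict, List, Tuple

def score_model(scores: Dict[str, float], weights: Dict[str, float],
                category_pass: float, overall_pass: float) -> Tuple[float, List[str], bool]:
    """Combine subjective category scores (0-100) into a weighted overall
    score. The model passes only if the overall score and every category
    score exceed their passing thresholds."""
    total_weight = sum(weights.values())
    overall = sum(scores[c] * w for c, w in weights.items()) / total_weight
    failing = [c for c in weights if scores[c] <= category_pass]
    return overall, failing, overall > overall_pass and not failing

# Hypothetical categories, weights, and scores for illustration only.
weights = {"face_validity": 3, "historical_comparison": 4,
           "sensitivity_analysis": 2, "documentation": 1}
scores = {"face_validity": 80, "historical_comparison": 70,
          "sensitivity_analysis": 65, "documentation": 90}

print(score_model(scores, weights, category_pass=60, overall_pass=70))
# (74.0, [], True)

scores["sensitivity_analysis"] = 55
print(score_model(scores, weights, category_pass=60, overall_pass=70))
# (72.0, ['sensitivity_analysis'], False)
```

The second call shows why per-category thresholds matter: the overall score still exceeds 70, but one weak category fails the model.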

Next steps

Which software delivery simulation validation method to choose depends on many factors. Ideally, the simulation should be validated from multiple angles to obtain a more complete picture of its performance. However, this may not always be practical, whether because of the nature of the project or because of time and budget constraints. Roungas et al. (2017) identified 64 verification and validation methods, 46 of which are relevant to validation:

- Acceptance Testing

- Alpha Testing

- Beta Testing

- Comparison Testing

- Compliance Testing

- Data Analysis Techniques

- Data Interface Testing

- Documentation Checking

- Equivalence Partitioning Testing

- Execution Testing

- Extreme Input Testing

- Face Validation

- Fault/Failure Analysis

- Fault/Failure Insertion Testing

- Field Testing

- Functional (Black-Box) Testing

- Graphical Comparisons

- Induction

- Inference

- Inspections

- Invalid Input Testing

- Logical Deduction

- Model Interface Analysis

- Model Interface Testing

- Object-Flow Testing

- Partition Testing

- Predictive Validation

- Product Testing

- Proof of Correctness

- Real-Time Input Testing

- Reviews

- Self-Driven Input Testing

- Sensitivity Analysis

- Stress Testing

- Structural (White-Box) Testing

- Structural Analysis

- Submodel/Module Testing

- Symbolic Evaluation

- Top-Down Testing

- Trace-Driven Input Testing

- Traceability Assessment

- Turing Test

- User Interface Analysis

- User Interface Testing

- Visualization/Animation

- Walkthroughs

We do not suggest that the team consider all of these approaches, but the team should be aware that validation is neither a simple task nor one too complex to be useful. Choose selectively. Pace (2004) presents a simplified, introductory perspective on verification and validation that can help the team establish the terminology and conceptual framework needed to begin. Regardless of the validation approach selected, planning validation beforehand will help set up the simulation project for success.

References:

Hicks, J. L., Uchida, T. K., Seth, A., Rajagopal, A., & Delp, S. L. (2015). Is my model good enough? Best practices for verification and validation of musculoskeletal models and simulations of movement. Journal of Biomechanical Engineering, 137(2), 020905-1–020905-24.

Pace, D. K. (2004). Modeling and simulation verification and validation challenges. Johns Hopkins APL Technical Digest, 25(2), 163-172.

Roungas, B., Meijer, S., & Verbraeck, A. (2017). A framework for simulation validation & verification method selection. In A. Ramezani, E. Williams, & M. Bauer (Eds.), Proceedings of the 9th International Conference on Advances in System Simulation, SIMUL 2017 (pp. 35-40).

Sargent, R. G. (1999, December). Validation and verification of simulation models. In WSC'99. 1999 Winter Simulation Conference Proceedings: Simulation-A Bridge to the Future (Cat. No. 99CH37038) (Vol. 1, pp. 39-48). IEEE.

Schlesinger, S. (1979). Terminology for model credibility. Simulation, 32(3), 103–104.

Verhulst, F. (1999). The validation of metaphors. In D. DeTombe, C. van Dijkum, & E. van Kuijk (Eds.), Validation of Simulation Models (pp. 30-44). Amsterdam: SISWO.